Introduction

According to a recent study by PwC, Artificial Intelligence (AI) is expected to add approximately $15.7 trillion to the global economy. As AI takes on a crucial role across domains, Generative AI and Large Language Models (LLMs) have emerged as a general-purpose technology and a powerful building block for AI-powered applications capable of generating enormous business value.

Problem Statement

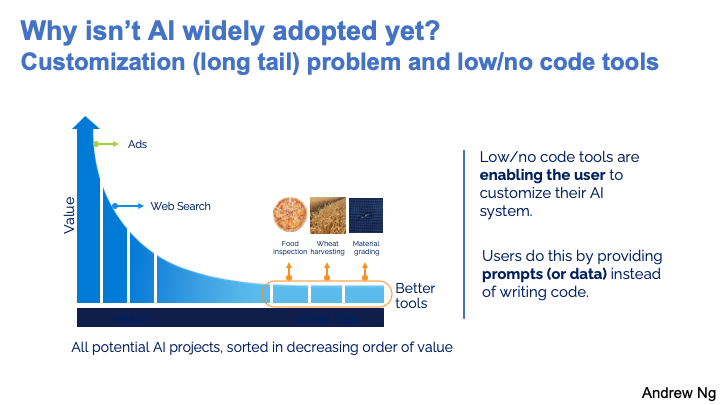

During his recent talk at Stanford University, Andrew Ng, one of the most influential people in the AI field, explained how AI holds tremendous potential for transforming businesses and driving economic growth. However, adoption of AI outside of Big Tech has been slow, for several reasons:

- Implementing AI requires highly skilled data scientists and engineers, which is costly for companies operating on a limited budget.

- Applied AI is still a challenge: the outcome often depends on running several experiments, which requires upfront investment.

- Although AI has shown promise, many industries outside of Big Tech do not yet know which of their problems AI could solve.

- Small and medium businesses (SMBs) often lack the resources to build custom AI solutions from scratch. Furthermore, even most larger companies struggle to scale AI across the organization because the technology is complex to develop and deploy. Last year Zillow reported an $880M loss on its AI-driven home-flipping technology.

- Last, and most important, most AI platforms today still require coding and knowledge of complex AI algorithms, and therefore fail to deliver impactful AI solutions.

Source: Andrew Ng, Stanford GSB Talk on Opportunities in AI, 2023

This gap presents an opportunity: a novel no-code AI platform that makes it easier to apply proven AI products to real business problems. Tapping into this long tail requires easy-to-use, no-code AI platforms that enable business users to build, deploy, and manage AI-powered tools without coding. The integration of no-code platforms can accelerate the adoption of AI and reduce the time to realize its benefits. Current notable AI platforms, such as Google Vertex, AWS SageMaker Studio, IBM Watson AI, Microsoft AI Copilot Studio, or Palantir AI Platform (AIP), have significant limitations, such as:

- On-premise hosting limitations: Users are not able to host applications built on these platforms on-premise or in their private environment. Many organizations cannot afford to send their data to a cloud, especially when the data centers are located outside their own countries.

- Model selection restrictions: The choice of AI models is often limited to the ones available on one platform. For instance, IBM Watson AI doesn’t provide access to models provided by AWS or Microsoft, and vice versa.

- Proprietary nature: These platforms are proprietary, meaning that customization options beyond the functionality provided are limited.

In summary, there is a need for no-code AI platforms that truly require zero coding. Our platform is not only a no-code platform that can be deployed on-premise; it also allows easy customization for different use cases, integrates data seamlessly, provides transparent explanations, and enables collaborative development between business and technical users.

Our Mission

To accelerate adoption of AI for small and medium-sized businesses through pre-built customizable AI solutions and a seamless, no-code AI development platform.

Our Solution

Steve Jobs once said: “The code that's the fastest to write, the code that's the easiest to maintain and the code that never breaks - is the code you don't write.”

A NextGen AI platform

In this context, IngestAI is a full-stack open-source AI platform that enables users without a technical background to leverage the power of Generative AI and Machine Learning (ML). This empowers them to automate various business processes without any coding expertise. IngestAI offers a wide range of potential applications covering areas such as process automation, contextual search, virtual agents, AI copilots, OCR, and more.

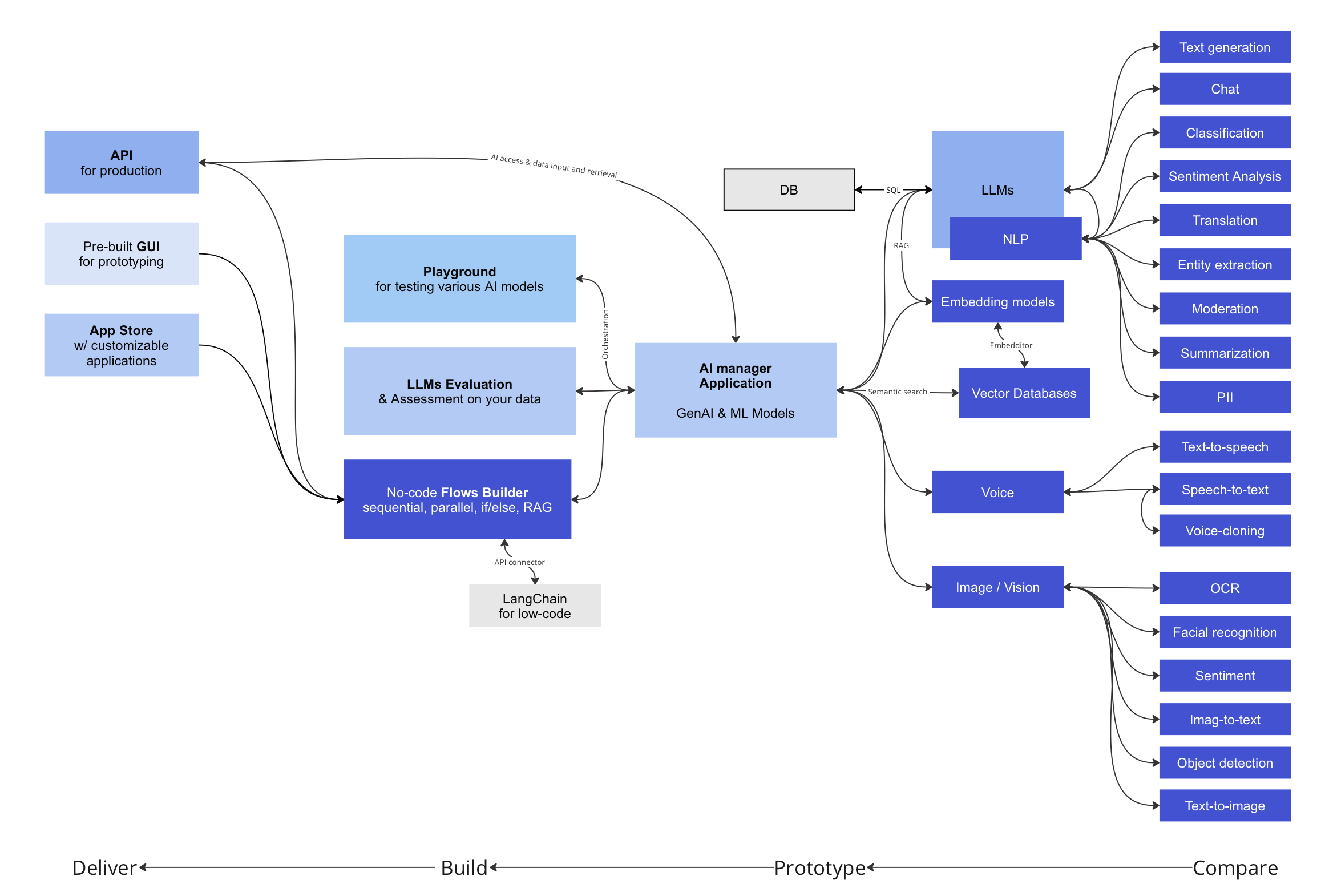

IngestAI ‘marketecture’

AI-in-a-Box

IngestAI adopts an "AI-in-a-box" approach that allows businesses, especially SMBs, to benefit from custom AI-powered solutions. The platform achieves this by leveraging the best of open-source and proprietary AI models from Google Gemini, OpenAI, AWS, Cohere, Anthropic, and others, without the need to develop their own models and applications from scratch. The goal of IngestAI is to make AI more accessible, organized, and useful not only for large enterprises but also for SMBs.

Bring-Your-Own-Model (BYOM)

While our platform supports a suite of options from ingestion and modeling to deployment, we also allow users to bring their own custom models. This protects customer privacy, because the application can live entirely within the customer's network in a secure manner, which builds the organization's trust in the platform. We also support logging: if necessary, our team can help analyze the logs, or, for highly private applications, in-house data science teams can analyze them.

User Adoption

Since its launch in February 2023, IngestAI has quickly gained popularity as a community-driven platform for rapid exploration, prototyping, and deployment of various AI use cases.

The platform has gained significant industry recognition, including:

- A growing user base with thousands of users

- Acceptance to the StartX AI Series

- ProductHunt Product of the Day

- Selection to the PLUGandPLAY Silicon Valley acceleration program

- Endorsement by the esteemed Cohere Acceleration Program.

In less than one year, IngestAI has amassed four corporate customers, three of which are publicly traded companies. This level of traction attests to the platform's ability to generate tangible business value.

Technology Stack

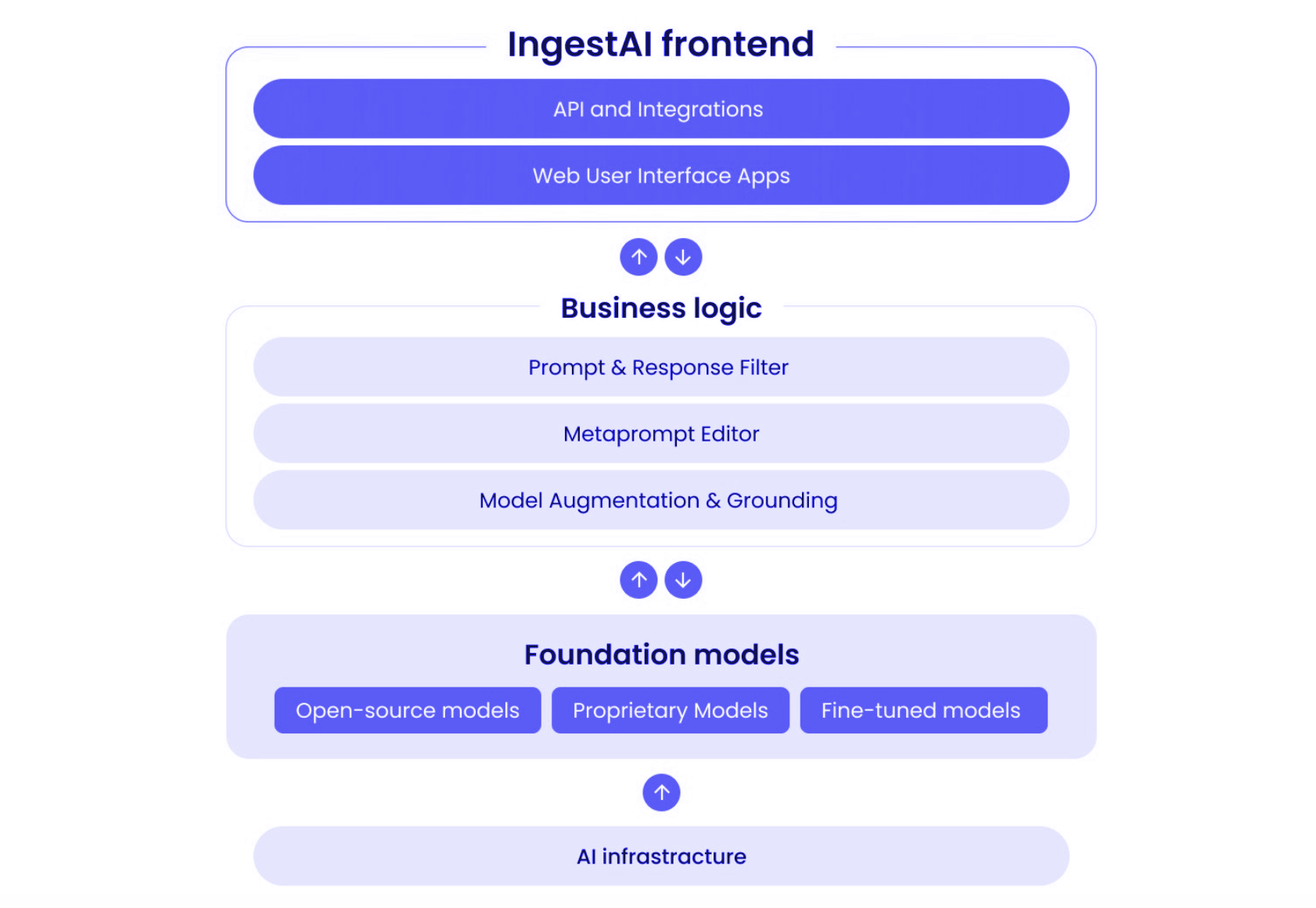

The IngestAI stack consists of three primary layers:

- front-end,

- orchestration,

- and foundation models.

Front-End:

The user experience layer focuses on delivering product experiences that address user requirements, and it defines the traditional user interface (UI) elements.

Orchestration layer:

The orchestration layer manages the logic and sequencing necessary to interact with models and infrastructure. Its components include prompt handling, metaprompts, and grounding to an internal knowledge base, among others.

Foundation layer:

The foundation models layer provides access to dozens of pretrained models, including language models from OpenAI (ChatGPT, GPT-4), Cohere, Anthropic, and AI21; image generation models like Midjourney, DALL-E, or StabilityAI; and speech-to-text or voice generation models by AWS, OpenAI, and others.

In summary, the IngestAI stack enables non-technical users to construct conversational AI assistants and more sophisticated Generative AI-powered applications by providing pretrained models, orchestration logic, and infrastructure within an integrated platform.

The IngestAI technology stack

Key Components

Foundation models

IngestAI users have several options for implementing foundation models in their applications. For example, they can use hosted foundation models from Azure, OpenAI, AWS, Google, or Anthropic. They can also fine-tune these hosted models to align with their specific requirements. When neither option works for their use case, more technically advanced AI developers can bring their own foundation models, including open-source models available on platforms such as Hugging Face or GitHub. This gives advanced developers flexibility in how they implement the foundation models that power their applications.

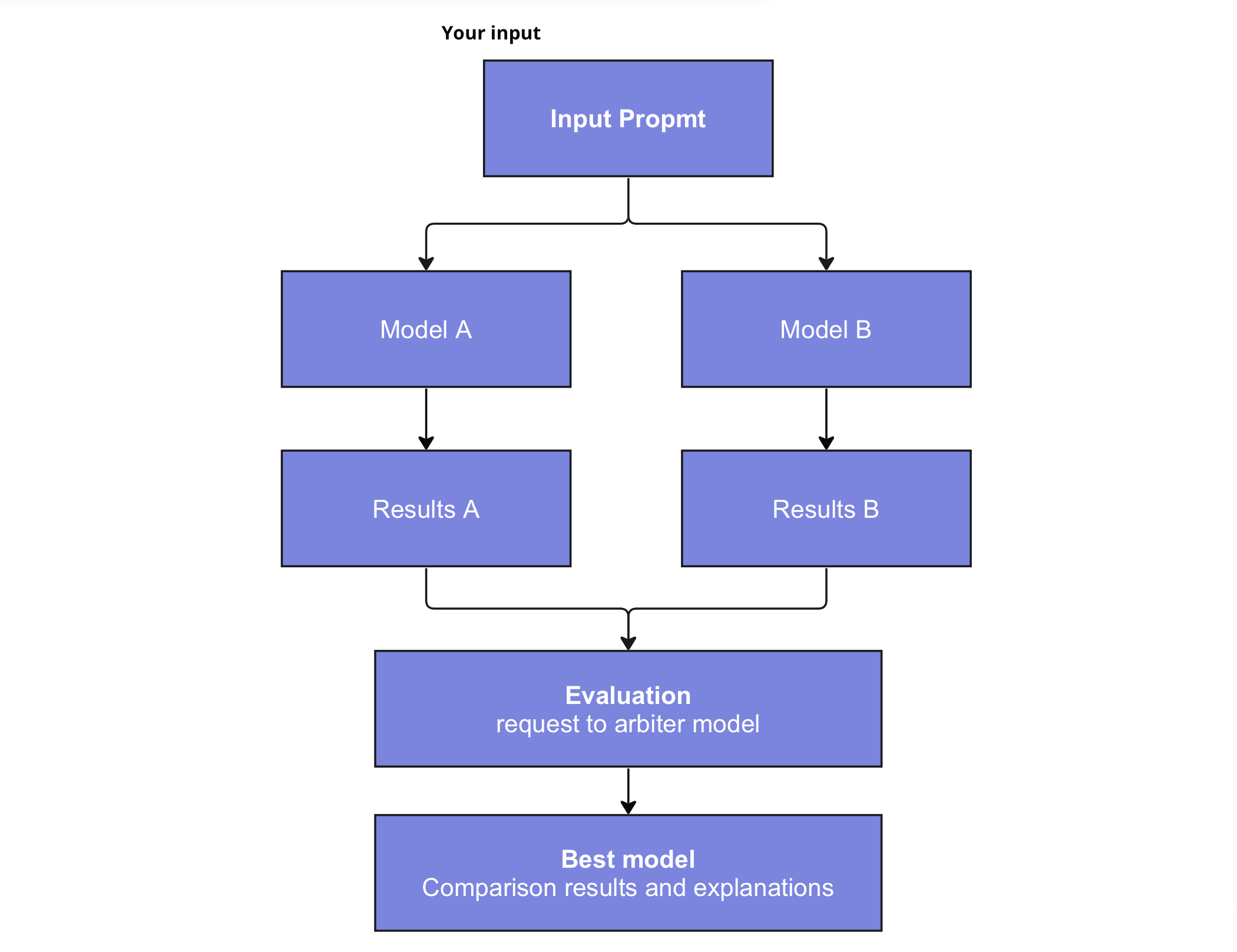

AI Aggregator

The AI Aggregator is a patent-pending application and one of the core components of IngestAI. It leverages the strengths of foundation models to streamline a critical phase in building any AI-powered application: the comparison and selection of AI models. The Aggregator expedites and simplifies this process by collecting the outputs of various Generative AI models and then having a separate AI model compare the results. It provides several advantages, such as easy comparison of different AI models’ accuracy, diversity of perspectives, and bias detection and mitigation.

A/B testing of LLMs with IngestAI Aggregator
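The compare-and-select flow described above can be sketched in a few lines. This is only an illustration under stated assumptions: the candidate models are plain Python callables, and `collect_answers`, `pick_best`, and `toy_judge` are hypothetical names, not part of the IngestAI API. In practice the judge would itself be a model call.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a hosted model: takes a prompt, returns text.
ModelFn = Callable[[str], str]


def collect_answers(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every candidate model and gather the outputs."""
    return {name: model(prompt) for name, model in models.items()}


def pick_best(prompt: str, answers: Dict[str, str],
              judge: Callable[[str, str], float]) -> str:
    """Score each answer with the judge and return the name of the top model."""
    scores = {name: judge(prompt, text) for name, text in answers.items()}
    return max(scores, key=scores.get)


# Toy models and a toy judge so the sketch runs without network access.
models: Dict[str, ModelFn] = {
    "model_a": lambda p: "Paris",
    "model_b": lambda p: "The capital of France is Paris.",
}


def toy_judge(prompt: str, answer: str) -> float:
    # Assumption for illustration only: prefer answers written as full sentences.
    return 1.0 if answer.endswith(".") else 0.5


question = "What is the capital of France?"
answers = collect_answers(question, models)
best = pick_best(question, answers, toy_judge)
```

Keeping the judge as a separate callable means the scoring rule can be swapped without touching the code that queries the candidates.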

Metaprompt editor

The Metaprompt editor is where users write the set of instructions they provide to the application. These instructions are passed down to the model to align it with the application that users are building. In essence, the metaprompt editor is where users shape the model’s personality and impart new skills or capabilities to it. In other words, designing a metaprompt can be likened to a form of fine-tuning that allows users to ‘teach’ the model new capabilities.
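As a rough sketch of how a metaprompt might travel with each request, the snippet below places it ahead of the user's query in the role/content message layout used by many chat-style APIs. Whether IngestAI uses this layout internally is an assumption, and `build_messages` is a hypothetical helper.

```python
from typing import Dict, List


def build_messages(metaprompt: str, user_query: str) -> List[Dict[str, str]]:
    """Put the metaprompt first as a system-style instruction, then the user query."""
    return [
        {"role": "system", "content": metaprompt.strip()},
        {"role": "user", "content": user_query.strip()},
    ]


# Example metaprompt: it sets a persona and two behavioral rules.
metaprompt = """
You are a support assistant for an online bookstore.
Answer in two sentences or fewer and never discuss pricing.
"""

messages = build_messages(metaprompt, "Where is my order?")
```

Because the metaprompt is plain text sent with every request, editing it changes the assistant's behavior immediately, without retraining the model.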

Grounding [to internal knowledge-base]

Grounding enhances the prompt with additional context that helps the model give a more accurate response. The process involves analyzing the prompt and the user query, then querying a search index to retrieve relevant documents or parts of documents. The retrieved passages, often relevant sentences or paragraphs, are appended to the prompt and sent to the model so that it can provide a more precise answer.
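The retrieve-then-append flow can be illustrated with a minimal sketch. It assumes a simple word-overlap ranking in place of a real search index, and the function names and sample knowledge base are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: List[str], top_k: int = 1) -> List[str]:
    """Rank passages by word overlap with the query (a stand-in for a search index)."""
    query_words = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(query_words & tokenize(p)), reverse=True)
    return ranked[:top_k]


def ground_prompt(prompt: str, query: str, passages: List[str], top_k: int = 1) -> str:
    """Append the most relevant passages to the prompt, followed by the user query."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, top_k))
    return f"{prompt}\n\nContext:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping to Canada takes 5 to 7 business days.",
]

grounded = ground_prompt(
    "Answer using only the context.",
    "How long does shipping to Canada take?",
    knowledge_base,
)
```

A production system would typically replace the overlap ranking with a vector or keyword search, but the shape of the final prompt stays the same.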

Embedditor

The Embedditor allows users to refine their embedding data via a user-friendly interface. It leverages advanced natural language processing (NLP) techniques to cleanse embedding tokens, which enhances efficiency and accuracy. It also allows users to optimize vector searches by intelligently splitting or merging content to make text chunks more semantically coherent. Embedditor gives users full control over their data by allowing them to deploy it locally, on their enterprise cloud, or on-premises. Embedditor's unique algorithm can remove irrelevant and low-relevance tokens from embeddings with just one click, saving 15% to 40% of embedding and vector storage costs while also improving search results.
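To make the two ideas concrete (dropping low-relevance tokens and merging chunks), here is a minimal sketch. It is not the Embedditor's algorithm: it assumes a tiny hand-written stop-word list and a word-count threshold for merging, and all names are hypothetical.

```python
import re
from typing import List

# A tiny illustrative stop-word list; a real one would be much longer.
STOP_WORDS = {"a", "an", "the", "of", "to", "and", "is", "in", "it"}


def clean_tokens(text: str) -> List[str]:
    """Lowercase, drop punctuation, and remove low-information stop words."""
    words = re.findall(r"[A-Za-z0-9']+", text.lower())
    return [w for w in words if w not in STOP_WORDS]


def merge_short_chunks(chunks: List[str], min_words: int = 5) -> List[str]:
    """Append any chunk shorter than min_words to the chunk before it."""
    merged: List[str] = []
    for chunk in chunks:
        if merged and len(chunk.split()) < min_words:
            merged[-1] = f"{merged[-1]} {chunk}"
        else:
            merged.append(chunk)
    return merged


text = "The invoice is due in 30 days and it is sent to the customer."
words_before = len(text.split())
words_after = len(clean_tokens(text))

chunks = merge_short_chunks([
    "Payment terms are net 30 days.",
    "See above.",
    "Late fees apply after the due date.",
])
```

Fewer tokens per chunk means less text to embed and store, which is where the cost savings in this kind of preprocessing come from.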

Business logic orchestration layer

The Business logic orchestration layer is a pivotal component of the IngestAI platform. Its primary role is to manage the business logic and flow of the application. It sequences AI interactions and handles tasks such as prompting, filtering, grounding, integration, and augmentation of AI models. It also provides a framework for organizing and controlling the interaction between the user and the AI models, ensuring a smooth and effective user experience. Finally, it supports integrations with other tools and applications to extend the capabilities of the copilot application.

Integrating with messaging platforms like Slack, Discord, WhatsApp, Zoom, or Microsoft Teams allows users to set up IngestAI chatbots and virtual assistants to handle conversations and requests on these platforms. Users can have natural language conversations with the AI agent to get recommendations, generate content, answer questions, and automate tasks over chat.

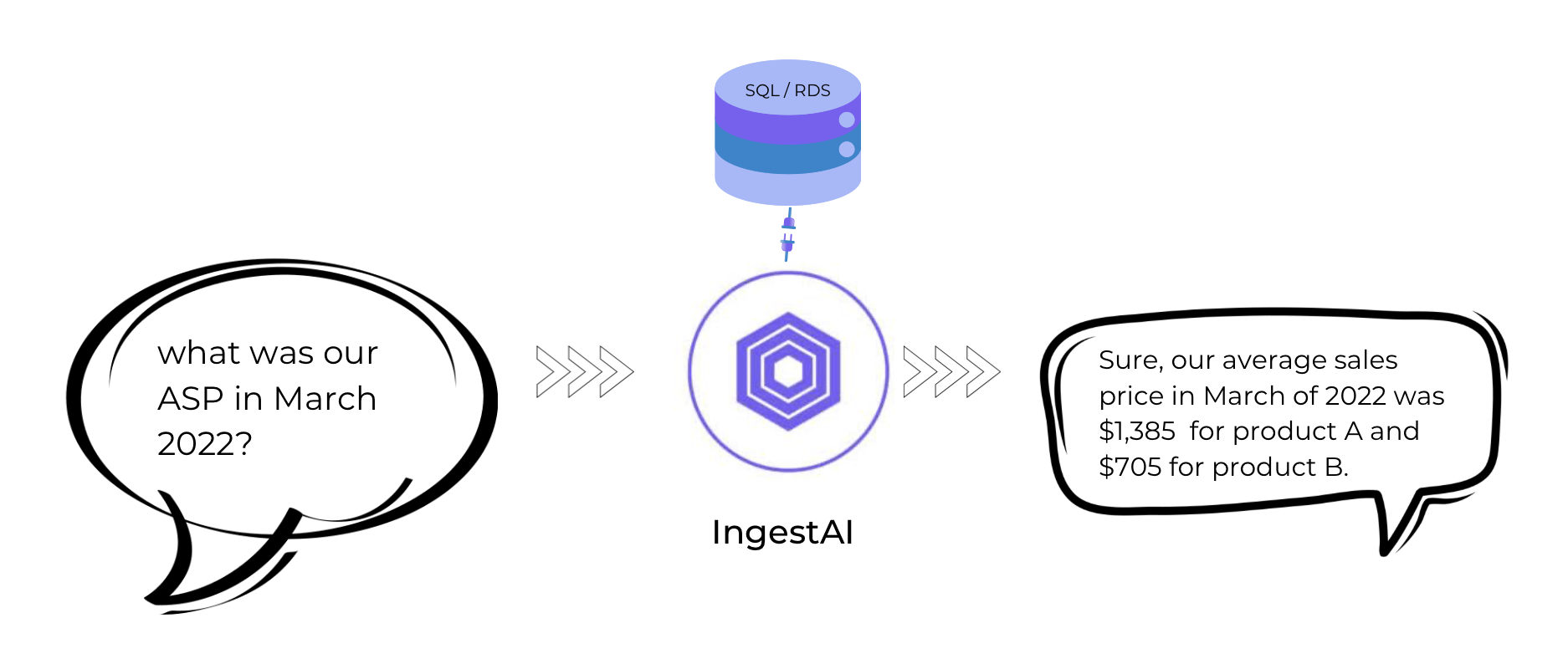
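One way to picture the sequencing role of the orchestration layer is as an ordered list of steps that each transform a shared state. The sketch below assumes this pipeline pattern for illustration; the step names, the fixed grounding string, and the stubbed model call are all hypothetical.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Step = Callable[[State], State]


def run_pipeline(state: State, steps: List[Step]) -> State:
    """Apply each step in order, passing the updated state along."""
    for step in steps:
        state = step(state)
    return state


def filter_input(state: State) -> State:
    # Trim whitespace and cap the query length before it reaches the model.
    return {**state, "query": state["query"].strip()[:500]}


def add_grounding(state: State) -> State:
    # A fixed string stands in for a knowledge-base lookup.
    return {**state, "context": "Store hours: 9am to 5pm."}


def build_prompt(state: State) -> State:
    return {**state, "prompt": f"{state['context']}\nUser: {state['query']}"}


def call_model(state: State) -> State:
    # Hypothetical stub: a real step would send state["prompt"] to a model.
    return {**state, "answer": f"(model reply to) {state['query']}"}


result = run_pipeline(
    {"query": "  When do you open?  "},
    [filter_input, add_grounding, build_prompt, call_model],
)
```

Adding an integration, such as posting the answer to a chat platform, would then be one more step appended to the list.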

No/SQL Plug-in

IngestAI's unique integration with relational and non-relational databases offers an AI-powered capability to create SQL and NoSQL queries from natural language questions, eliminating the need for technical knowledge and concerns about query syntax or structure. Users can simply type English-language questions about their data, and IngestAI generates the queries, making data more accessible for direct retrieval or as part of an AI application.

High level IngestAI No/SQL plug-in
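The question-to-query flow can be sketched with Python's built-in `sqlite3` module. The `generate_sql` function below is a hypothetical stand-in for the model call and simply returns a canned query; the table, data, and read-only guard are assumptions made for illustration.

```python
import sqlite3
from typing import List, Tuple


def generate_sql(question: str) -> str:
    """Hypothetical stand-in for the model that turns a question into SQL."""
    canned = {
        "how many orders were placed in 2023?":
            "SELECT COUNT(*) FROM orders WHERE strftime('%Y', placed_on) = '2023'",
    }
    return canned[question.lower()]


def run_readonly(conn: sqlite3.Connection, sql: str) -> List[Tuple]:
    """Execute generated SQL, refusing anything that is not a SELECT statement."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()


# Build a small in-memory database to query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, placed_on TEXT)")
conn.executemany(
    "INSERT INTO orders (placed_on) VALUES (?)",
    [("2023-01-15",), ("2023-06-02",), ("2022-12-30",)],
)

rows = run_readonly(conn, generate_sql("How many orders were placed in 2023?"))
```

Checking that generated SQL is read-only before running it is a common precaution when the query text comes from a model rather than a developer.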

LangChain Plug-in

Built-in LangChain with API Plug-in: This feature extends the platform with the built-in functionality of the LangChain and LlamaIndex frameworks through a single [!API] curl request. Even if there is something the platform cannot do out of the box, it can be added with low-code using the built-in LangChain functionality.

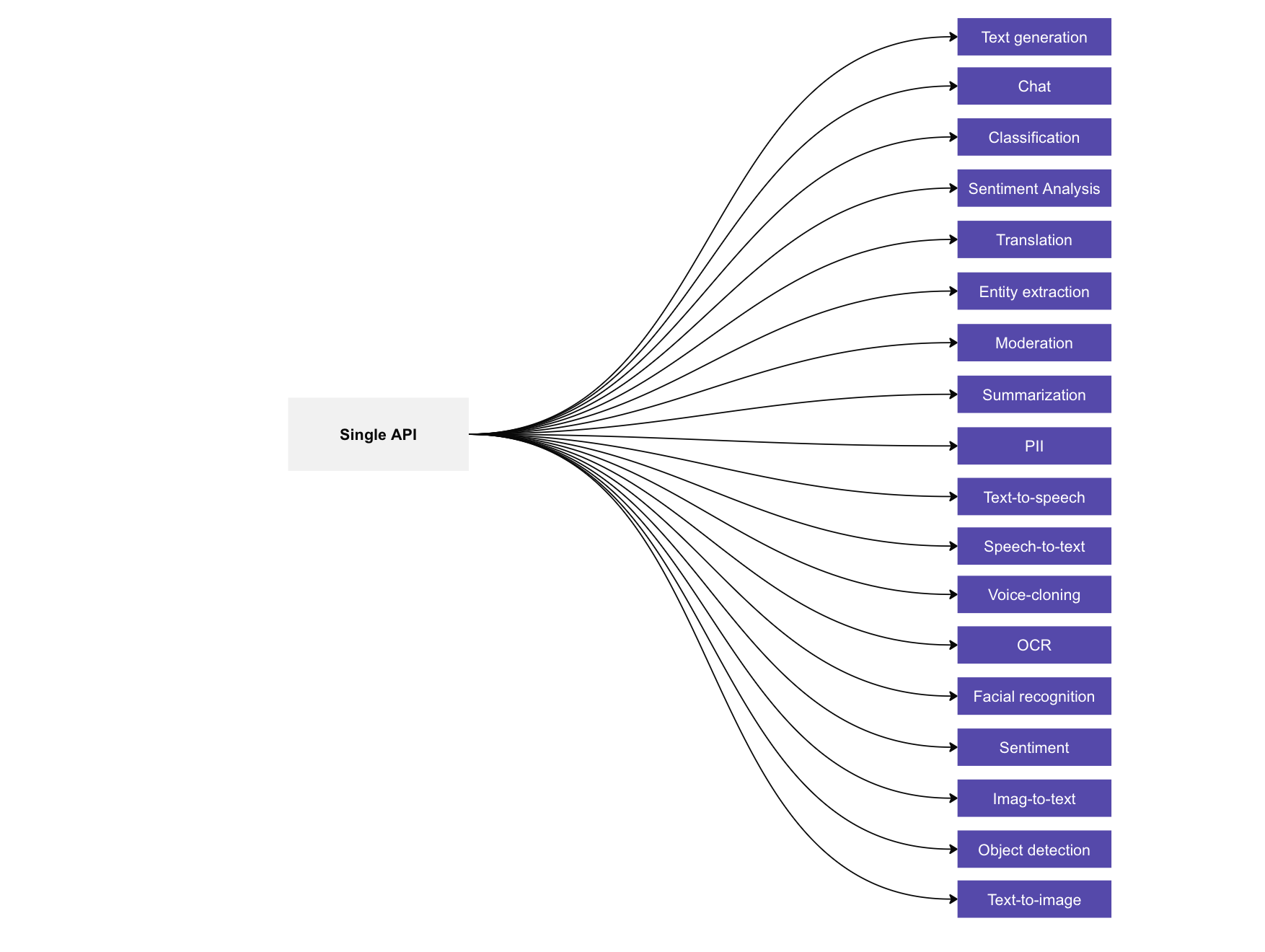

Multimodel API

Dozens of AI models from different suppliers (e.g. OpenAI, AWS, StabilityAI, Google Gemini, or Midjourney), as well as any app that chains multiple models in parallel, sequentially, or with a logical block, can be accessed through an API with the same format, changing only one line of code. This significantly simplifies and streamlines the development of new apps, especially outside of the IngestAI development environment.

IngestAI single API to multiple AI models
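The "change one line" idea can be illustrated with a small sketch in which every model sits behind the same function signature. The registry, the model names, and the `complete` function are hypothetical and do not reflect the actual IngestAI API.

```python
from typing import Callable, Dict

# Hypothetical registry mapping model names to provider-specific callables.
_PROVIDERS: Dict[str, Callable[[str], str]] = {
    "provider-a/chat": lambda prompt: f"[A] {prompt}",
    "provider-b/chat": lambda prompt: f"[B] {prompt}",
}


def complete(model: str, prompt: str) -> Dict[str, str]:
    """Route the prompt to the named model and return a uniform response shape."""
    if model not in _PROVIDERS:
        raise KeyError(f"unknown model: {model}")
    return {"model": model, "output": _PROVIDERS[model](prompt)}


MODEL = "provider-a/chat"  # the single line to change when switching models
response = complete(MODEL, "Summarize this ticket.")
```

Since the request and response shapes stay fixed, application code that consumes `response["output"]` does not need to change when the model does.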

Plug-in to Any External API

The IngestAI platform lets users connect to any external API, such as a real-time weather service or other external data sources. Using a cURL function, this feature integrates current and relevant external information into an application, enhancing the platform's functionality and enabling a more dynamic and informed AI experience. This capability not only broadens the scope of accessible data but also ensures that AI Builder remains agile and adaptable to ever-changing external inputs.
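As a rough illustration of pulling external data into a prompt, the sketch below fetches JSON over HTTP with Python's standard library and turns it into a context string. The endpoint URL and the response fields (`summary`, `temp_c`) are hypothetical, and the demonstration uses a fake fetcher so that it runs offline.

```python
import json
from typing import Callable
from urllib.parse import urlencode
from urllib.request import urlopen


def http_get_json(url: str) -> dict:
    """Fetch a URL and decode the JSON body (requires network access)."""
    with urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))


def weather_context(city: str, fetch: Callable[[str], dict] = http_get_json) -> str:
    """Build a one-line context string from a (hypothetical) weather endpoint."""
    url = "https://api.example.com/weather?" + urlencode({"city": city})
    data = fetch(url)
    return f"Current weather in {city}: {data['summary']}, {data['temp_c']} C"


def fake_fetch(url: str) -> dict:
    # Offline stand-in for the real HTTP call, returning the assumed response shape.
    return {"summary": "cloudy", "temp_c": 12}


context = weather_context("Oslo", fetch=fake_fetch)
```

The resulting string can be appended to a prompt in the same way as grounding passages from an internal knowledge base.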

Integrations

The IngestAI platform offers API endpoints for integrations with productivity apps like Notion and Google Docs to bring AI directly into users' workflows. For example, the Notion integration allows using IngestAI's AI models to generate content, summarize texts, extract keywords, and more from within Notion documents.

Some Examples of Apps Built On IngestAI

AI Learning Assistant for UC Berkeley

An AI-powered learning assistant has been implemented at UC Berkeley to support its online course in Machine Learning and Artificial Intelligence. The chatbot, which was created by IngestAI, uses the program's learning materials, including vocabulary, video transcripts, and reference guides, which were used to fine-tune the ChatGPT model. It has been integrated into the university's Slack workspace, offering students personalized and timely assistance.

AI Chatbot for 22k Discord community members

The IngestAI Chatbot is an AI bot that not only functions within a web interface but also integrates with widely used messaging platforms such as Slack, WhatsApp, and Discord. This integration enables businesses to establish self-service systems that respond promptly and resolve customer inquiries efficiently, deflecting up to 70% of contacts. As a result, businesses can elevate their overall customer experience while reducing operational costs.

To witness this in action, watch the AskBrett video on YouTube or experience it on Discord through AskBrett.

AI Web-chat

The AI-powered web chat built on IngestAI provides an intelligent and customized self-service solution for the users of the JustFund website. This conversational interface allows customers to ask questions naturally and gain insights about the company's offers, products, and services.

The AI model is equipped with a knowledge retrieval system to tap into the company's up-to-date product specifications, pricing and promotional information. This allows the web chat to provide accurate responses to common queries about products, orders and accounts. As a result, customers can quickly find the information they need. This web chat not only improves customer engagement levels, but it also elevates satisfaction by reducing the need for support calls through an intelligent self-service option.

To see the web chat in action, visit the JustFund website.

Customer Voice–Data Analytics

The customer voice interaction analytics application is a powerful tool for businesses that want to automate compliance and quality assurance while reducing costs by up to 40%. By analyzing 100% of customer interactions, businesses can generate actionable insights from customer service data, leading to improved customer satisfaction.

For a demonstration of its basic capabilities, watch the YouTube demo video.

These are only a few examples of AI-powered applications that one can build using the IngestAI platform. IngestAI users can easily assemble a range of other AI-powered applications. These applications can be further customized and tailored by the IngestAI team to suit any specific business requirements.

Key Benefits of AI-Powered Applications

Integrating AI-powered applications into your business can yield many advantages, such as:

- Enhance productivity: AI assistants and automation capabilities enable businesses to streamline processes, automate repetitive tasks, and free up valuable time for employees to focus on higher-value activities.

- Enhance customer experience: AI applications can analyze customer interactions, provide personalized recommendations, and automate customer service processes, resulting in improved customer satisfaction and loyalty.

- Drive innovation: By harnessing the power of AI, businesses can unlock new opportunities, develop innovative products and services, and gain a competitive edge in the market.

- Improve decision-making: AI-powered applications can analyze large amounts of data, identify patterns, and provide insights that can support informed decision-making.

Key Benefits of the IngestAI Platform

In particular, the integration of the IngestAI platform into your business can lead to many benefits, including:

- One-stop shop: Users do not need to source, develop and maintain different AI solutions, setting up various accounts and dealing with credentials and payment methods in different interfaces.

- Reduced costs: No large upfront investments or management of multiple vendors. Pay only for the AI applications used.

- Shorter time to value: Up to 70% faster implementation and go-live with ready-made AI applications.

- Scalability: Scale individual applications or the entire platform as business needs grow.

- Reusability: Reusing code and integrations accelerates development, ensuring consistency and reliability, while freeing up resources to focus on innovation. This approach streamlines maintenance and enhances the overall efficiency of prototyping and application development.

- Focus on value generation: IT and AI resources can focus on strategic initiatives and creative tasks rather than coding. Our team has consultants to support additional feature requests beyond the scope of the app.

- Future-ready: The platform will continue to evolve and incorporate the latest advances in generative AI.

Summary

IngestAI is a comprehensive AI platform that allows non-technical users to develop AI-powered applications. With its unique "AI-in-a-box" approach, it offers a wide range of potential applications such as process automation, search, virtual agents, and more, making generative AI more accessible and beneficial to businesses, particularly SMBs. Since its launch in February 2023, IngestAI has rapidly gained traction, counting tens of thousands of individuals in its user base and some publicly traded companies among its enterprise customers.

The platform includes core components such as the patent-pending AI Aggregator, which makes the selection of AI models easier, the App Store with pre-built customizable applications, and the AI App Builder, which orchestrates and manages the build process and workflow of the application. Additionally, IngestAI provides out-of-the-box integrations with productivity apps and messaging platforms through its single multimodel API.

IngestAI allows users to build AI-powered applications such as chatbots, copilots, automation and productivity tools, and advanced search engines, which can then be customized to meet specific business requirements. These AI applications enhance productivity, customer experience, and informed decision-making, while fostering innovation.

The key benefits of the IngestAI Platform include cost reduction, shorter time to value, scalability, and focus on core business. It aims to serve as a one-stop shop for all AI needs and is designed to evolve with the latest generative AI developments.
