Can AI ever be truly intelligent if it lacks factual knowledge? This question highlights the limitations of current Large Language Models (LLMs). While LLMs have shown remarkable capabilities in generating coherent and fluent text, they often struggle with factual accuracy and knowledge representation.

What are LLMs?

Large Language Models are powerful AI models trained on vast amounts of text data. They excel at tasks such as text generation, summarization, and translation. However, LLMs are prone to hallucination (generating plausible but incorrect information) and factual errors due to their reliance on patterns in the training data rather than grounded knowledge.

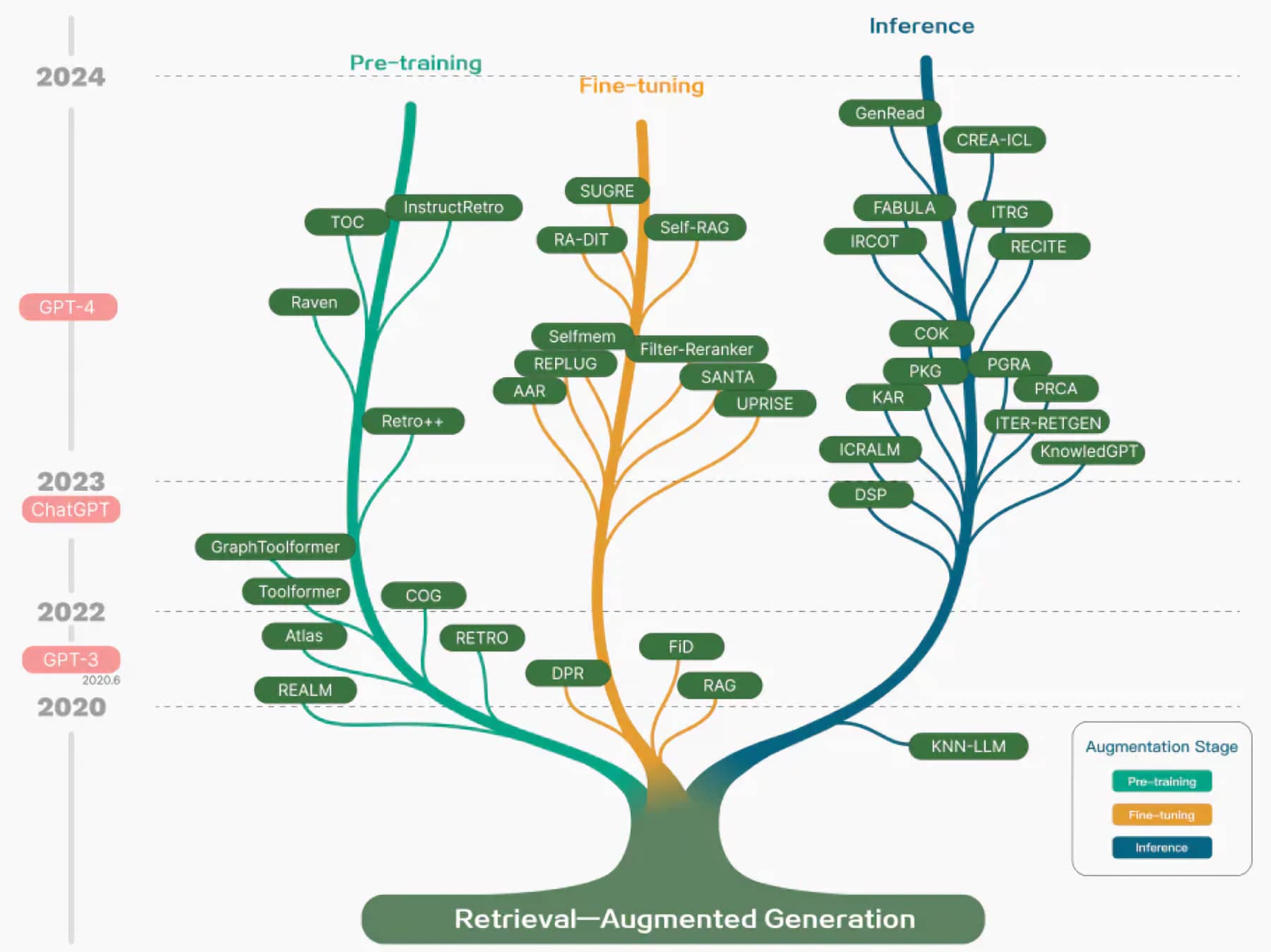

Retrieval-Augmented Generation (RAG) has emerged as a novel approach to address these limitations. RAG enhances LLMs by enabling them to access and integrate relevant external knowledge during the generation process. This article explores how RAG works, its benefits over traditional LLMs, and its potential to revolutionize AI systems.

How is RAG different?

The core concept of RAG lies in its two-module architecture:

Retriever module: Searches and retrieves relevant information from external knowledge sources based on the input prompt.

Generator module: Receives the retrieved information and the original prompt, then generates an informed response using this augmented context.

By grounding the LLM in factual knowledge, RAG aims to minimize factual errors and produce more accurate outputs. This makes RAG particularly suited for tasks requiring factual reasoning and knowledge integration, such as question answering or generating educational content.
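As a rough illustration of how the two modules fit together, here is a minimal Python sketch. Everything in it is an assumption made for the example: the function names `retrieve`, `generate`, and `echo_llm` are hypothetical, the retriever ranks passages by simple word overlap instead of using a real search index, and the "LLM" is a stand-in that just returns the prompt it was given.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(prompt: str, knowledge: list[str], k: int = 2) -> list[str]:
    """Toy retriever (assumption): rank passages by shared words with the prompt."""
    prompt_words = words(prompt)
    scored = [(len(prompt_words & words(p)), p) for p in knowledge]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep the top k passages that share at least one word with the prompt.
    return [passage for score, passage in scored[:k] if score > 0]


def generate(prompt: str, passages: list[str], llm: Callable[[str], str]) -> str:
    """Build the augmented prompt and hand it to the language model."""
    context = "\n".join(f"- {p}" for p in passages)
    augmented = f"Context:\n{context}\n\nQuestion: {prompt}\nAnswer:"
    return llm(augmented)


def echo_llm(augmented_prompt: str) -> str:
    """Stand-in for a real model: returns the prompt it received."""
    return augmented_prompt


knowledge = [
    "The Eiffel Tower is in Paris.",
    "Bananas are yellow.",
    "Paris is the capital of France.",
]
question = "Where is the Eiffel Tower?"
print(generate(question, retrieve(question, knowledge), echo_llm))
```

In a real system, `echo_llm` would be replaced by a call to an actual model, and `retrieve` by a search over an indexed knowledge source.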

RAG also enables LLMs to stay current with evolving real-world information without the need for retraining. This adaptability is a significant advantage over traditional LLMs, which are static once trained.

In the following sections, we will delve deeper into the workings of RAG, explore its benefits and applications, and discuss the motivations behind its development and its future potential. Whether you're a developer looking to create an AI assistant or simply curious about the latest advancements in language models, understanding RAG is crucial for anyone interested in the future of AI.

RAG Explained

Let's take a closer look at the two key components of RAG: the retriever module and the generator module.

Retriever Module

The retriever module is responsible for searching and retrieving relevant information from external knowledge sources based on the input prompt. This process typically involves the following steps:

Indexing: The external knowledge sources (e.g., databases, Wikipedia) are indexed to enable efficient search and retrieval.

Query Optimization: The input prompt is analyzed and transformed into an optimized query for effective retrieval.

Retrieval: The retriever searches the indexed knowledge sources and retrieves the most relevant information based on the optimized query.

The retriever module can utilize various techniques, such as dense retrieval or sparse retrieval, depending on the specific implementation and requirements.
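The three retriever steps can be sketched with a small sparse retriever. This is an illustrative example under stated assumptions, not a production design: the `SparseIndex` class, the `STOPWORDS` set, and the sample documents are all hypothetical, "query optimization" is reduced to lowercasing and stopword removal, and scoring uses term counts weighted by a smoothed inverse document frequency. A dense retriever would instead compare embedding vectors of the prompt and the documents.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical stopword list; real systems use larger, language-specific lists.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "what", "who", "to", "and"}


def tokenize(text: str) -> list[str]:
    """Lowercase, split into alphanumeric tokens, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


class SparseIndex:
    def __init__(self, documents: list[str]):
        # Indexing: build an inverted index mapping term -> {doc_id: count}.
        self.documents = documents
        self.postings: dict[str, dict[int, int]] = defaultdict(dict)
        for doc_id, doc in enumerate(documents):
            for term, count in Counter(tokenize(doc)).items():
                self.postings[term][doc_id] = count

    def idf(self, term: str) -> float:
        """Smoothed inverse document frequency: rarer terms weigh more."""
        df = len(self.postings.get(term, {}))
        return math.log((1 + len(self.documents)) / (1 + df)) + 1.0

    def search(self, prompt: str, k: int = 3) -> list[tuple[str, float]]:
        # Query optimization: reduce the prompt to its distinct content terms.
        query_terms = set(tokenize(prompt))
        # Retrieval: accumulate a score for every document that shares a term.
        scores: dict[int, float] = defaultdict(float)
        for term in query_terms:
            for doc_id, count in self.postings.get(term, {}).items():
                scores[doc_id] += count * self.idf(term)
        ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
        return [(self.documents[doc_id], score) for doc_id, score in ranked[:k]]


docs = [
    "RAG combines retrieval with generation.",
    "Dense retrieval uses embedding vectors.",
    "Bananas are rich in potassium.",
]
index = SparseIndex(docs)
for text, score in index.search("What is dense retrieval?", k=2):
    print(f"{score:.2f}  {text}")
```

Because the index is built once and queried many times, most of the cost sits in the indexing step, which is why it is done ahead of time.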

Generator Module

The generator module receives the retrieved information along with the original prompt and uses this augmented context to generate a more informed and accurate response. The key steps in the generator module are:

Context Integration: The retrieved information is integrated with the original prompt to form an enriched context for generation.

Generation: The LLM generates a response based on the integrated context, leveraging both the external knowledge and its own learned patterns.

Output Refinement: The generated response may undergo additional refinement, such as filtering or re-ranking, to ensure the most relevant and accurate output.

By combining the power of retrieval and generation, RAG enables LLMs to produce more factually grounded and contextually relevant responses.

Benefits of RAG over Traditional LLMs

RAG offers several significant benefits compared to traditional LLMs:

Enhanced Accuracy: By grounding the LLM in factual knowledge retrieved from reliable sources, RAG reduces factual errors and hallucinations, resulting in more accurate outputs. The improvement depends on the retriever finding relevant, trustworthy material.

Improved Adaptability: RAG allows LLMs to access up-to-date information without the need for retraining, enabling them to stay current with evolving real-world knowledge.

Broader Range of Applications: With its ability to integrate external knowledge, RAG opens up new possibilities for LLMs in tasks that require factual reasoning and domain-specific knowledge.

| Benefit | RAG | Traditional LLMs |
| --- | --- | --- |
| Accuracy | High | Low |
| Adaptability | High | Low |
| Range of Applications | Wide | Limited |

The table above summarizes the key benefits of RAG compared to traditional LLMs in terms of accuracy, adaptability, and applicability. The ratings are qualitative and relative to each other, not measured benchmarks.

Motivation and Future of RAG

Motivation behind RAG

The development of RAG is motivated by the need to address the limitations of traditional LLMs in terms of factual grounding and knowledge representation. As AI systems become increasingly integrated into various domains, such as healthcare, finance, and education, the demand for reliable and accurate language models has grown significantly.

RAG aims to bridge the gap between the impressive language generation capabilities of LLMs and the requirement for factual correctness and domain-specific knowledge. By enabling LLMs to access and integrate external knowledge, RAG takes a significant step towards creating AI systems that are not only intelligent but also reliable and trustworthy.

The Future of RAG

As research on RAG continues to advance, we can expect to see further improvements in retrieval techniques and knowledge source integration. Some ongoing research directions include:

Adaptive RAG: Developing dynamic strategies for selecting the most appropriate retrieval and generation approaches based on the input prompt and context.

Multi-modal RAG: Extending RAG to incorporate knowledge from multiple modalities, such as images and videos, in addition to text.

Domain-specific RAG: Tailoring RAG for specific domains, such as legal, medical, or scientific knowledge, to enable more specialized and accurate language models.

The potential applications of RAG are vast, spanning across various industries. Some notable examples include:

Healthcare: RAG-enhanced LLMs can assist in medical diagnosis, treatment recommendation, and patient communication by integrating up-to-date medical knowledge.

Education: RAG can power intelligent tutoring systems and educational content generation by leveraging domain-specific knowledge and adapting to student needs.

Finance: RAG-based models can provide accurate financial analysis, risk assessment, and investment recommendations by incorporating real-time market data and financial knowledge.

Customer Support: RAG can enable more informed and contextually relevant responses in chatbots and virtual assistants by integrating knowledge from product manuals, FAQs, and customer interaction histories.

As RAG continues to evolve and mature, we can expect to see a growing number of real-world applications that harness its potential to create more accurate, adaptable, and knowledge-grounded language models.

Conclusion

Retrieval-Augmented Generation (RAG) represents a significant advancement in enhancing large language models with external knowledge. By combining the strengths of retrieval and generation, RAG addresses the limitations of traditional LLMs in terms of factual accuracy and knowledge integration.

The benefits of RAG, including enhanced accuracy, improved adaptability, and a broader range of applications, make it a promising approach for creating AI systems that are not only intelligent but also reliable and trustworthy. As research on RAG progresses and its applications expand across various domains, we can anticipate a future where language models are more deeply grounded in real-world knowledge and capable of providing accurate and contextually relevant responses.

To further explore the potential of RAG and stay updated with the latest developments, we encourage readers to read the research literature on RAG and explore the growing community of RAG enthusiasts and practitioners. By understanding and leveraging RAG, developers and AI enthusiasts can create more powerful and versatile language models that push the boundaries of what is possible with AI.

Remember, whether you're aiming to create an AI assistant, improve chatbot performance, or generate high-quality content, RAG offers a compelling approach to enhance the capabilities of language models. Embrace the power of retrieval and generation, and unlock new possibilities in the world of AI and natural language processing.