Generative artificial intelligence (AI) is poised to transform numerous industries through its ability to produce novel, high-quality data. Unlike AI techniques focused solely on analyzing existing information, generative AI models learn from vast datasets and then generate new data samples, including images, text, audio, and video.

The applications of generative AI span synthetic data generation, creative content production, simulation, and beyond. From creating photorealistic images to composing original music to suggesting automated email responses, generative AI unlocks innovative possibilities.

This article serves as a comprehensive guide, starting by demystifying generative AI and distinguishing it from related concepts. We will then explore the main types of generative AI models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), autoregressive models, and flow models.

Next, we will highlight the diverse applications of generative AI across domains, including natural language processing, computer vision, simulation, and audio generation. We will also cover the crucial use case of synthetic data generation, which helps tackle data scarcity and privacy issues when training AI models.

Finally, we will survey some of the most popular and influential generative AI models, such as GPT-3, GPT-4, Stable Diffusion, and AlphaFold, along with their key features.

What is generative AI and how does it work?

Generative AI refers to a category of AI techniques focused on creating new, original data samples rather than just analyzing existing information. The key principle behind generative AI models is learning from large datasets and then using that knowledge to produce additional synthetic yet realistic data.

This approach differs from other branches of AI, such as predictive modeling and pattern recognition, which identify trends and relationships in available data to make forecasts or classifications about new data points. In probabilistic terms, such discriminative models learn how to map an input to a label, whereas generative models learn the distribution of the data itself, from which new samples can be drawn.

In practice, this means generative AI models can create entirely new data points. For instance, a generative image model can produce photorealistic pictures of people who don't exist, while a generative text model can write coherent articles on arbitrary topics.

The data generation process relies on capturing the intricate patterns and structures within the training data, which the model then replicates when sampling new data points. With recent advances in deep learning and computational power, generative AI models can now generate remarkably human-like outputs across modalities like text, images, audio, and video.
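To make the "learn, then sample" idea concrete, here is a minimal Python sketch of the simplest possible generative model, assuming the data is one-dimensional and roughly Gaussian. "Training" estimates the distribution's mean and standard deviation; "generation" draws brand-new values from it. The height values are made up for illustration. Deep generative models replace the Gaussian with a neural network that can capture far more intricate structure, but the two-phase loop is the same.

```python
import random
import statistics


def fit_gaussian(samples):
    """'Training': estimate the mean and standard deviation of the data."""
    return statistics.fmean(samples), statistics.stdev(samples)


def generate(mu, sigma, n, seed=0):
    """'Generation': draw n new points from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical "real" data: adult heights in centimetres.
real_heights = [162.0, 170.5, 158.3, 175.2, 168.9, 181.0, 165.4, 172.8]

mu, sigma = fit_gaussian(real_heights)
synthetic_heights = generate(mu, sigma, n=5)
print(f"learned mean={mu:.1f} cm, std={sigma:.1f} cm")
print([round(h, 1) for h in synthetic_heights])
```

The synthetic heights resemble the real ones statistically without copying any of them.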

Prominent techniques for developing generative AI models include:

Generative Adversarial Networks (GANs): GANs pit two neural networks, a generator and a discriminator, against each other in a competitive training process that produces increasingly realistic synthetic data.

Variational Autoencoders (VAEs): VAEs compress input data into a latent space representation and learn an efficient mapping from the latent space back to the data space, enabling diverse data generation.

Autoregressive Models: These models estimate the probability distribution of each value in a sequence conditioned on the previous values, making them well suited to generating sequential data such as text, audio, and time series.

In the next sections, we will explore these techniques and the applications of generative AI in depth. The generative capabilities of AI are only beginning to be explored, with transformative implications across industries.

Types of Generative AI Models

There are several categories and architectural paradigms for developing generative AI models. Let's explore some prominent techniques leveraged to build powerful and flexible generative models:

Generative Adversarial Networks (GANs)

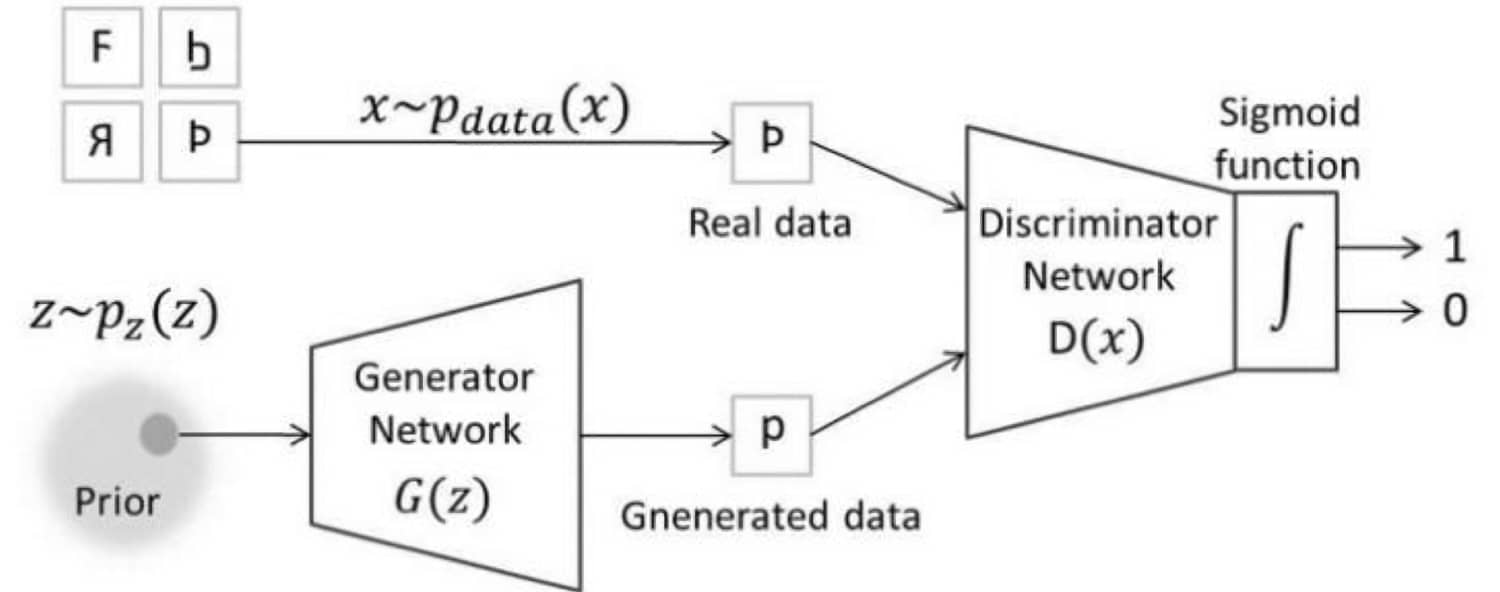

GANs employ an innovative adversarial training process involving two neural networks: a generator and a discriminator.

The generator attempts to produce synthetic samples that mimic real data, while the discriminator tries to distinguish generated samples from real ones. The two networks improve by competing with each other during training; ideally, training reaches a point where the discriminator can no longer reliably tell the generator's output apart from real data.

This approach of leveraging the discriminator as an adversary enables GANs to produce remarkably realistic data across applications like image generation, video generation, molecular design, and time series synthesis.
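The adversarial game between the two networks is usually formalized as a pair of binary cross-entropy losses. The sketch below is a minimal Python illustration of those losses, not a training loop. It assumes the discriminator outputs the probability that a sample is real, and it uses the common "non-saturating" generator loss suggested in the original GAN paper, in which the generator maximizes log D(G(z)). The input probabilities are invented values.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator minimizes.

    d_real: D's probabilities that real samples are real (should be high).
    d_fake: D's probabilities that generated samples are real (should be low).
    """
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating loss: the generator wants D to call its samples real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots the fakes.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))  # low, D is winning
print(generator_loss([0.1, 0.05]))                    # high, G is losing

# At equilibrium the discriminator can only guess.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))     # 2*ln(2), about 1.386
```

At the theoretical equilibrium, where the discriminator outputs 0.5 for every sample, its loss settles at 2 ln 2: it can do no better than a coin flip.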

Some popular GAN architectures include Deep Convolutional GANs (DCGANs), Conditional GANs (CGANs), Wasserstein GANs (WGANs), and CycleGANs.

A schematic diagram of the GAN training process involving the generator and discriminator networks.

Variational Autoencoders (VAEs)

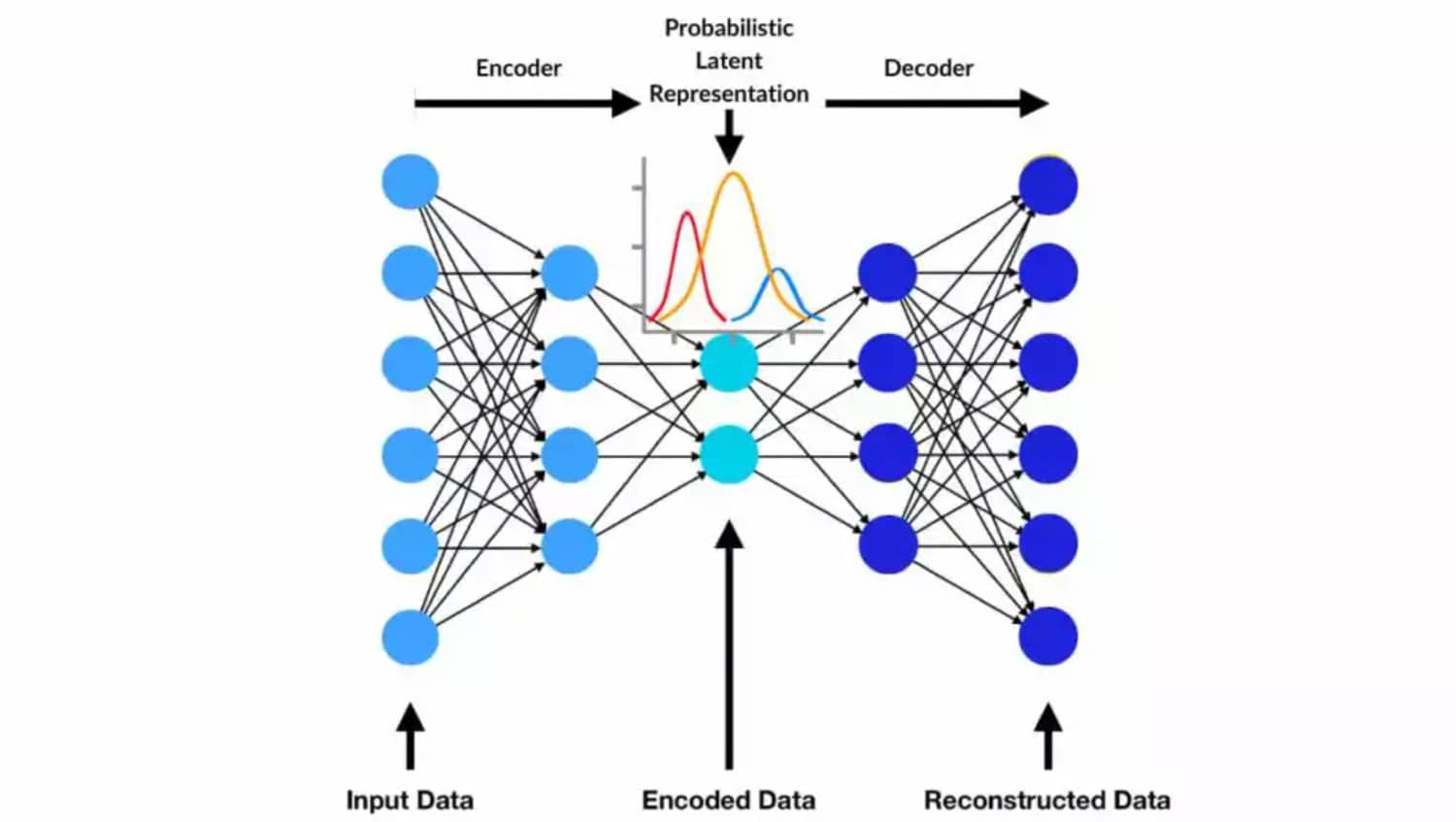

Unlike GANs, VAEs employ an autoencoder structure involving an encoder and a decoder neural network.

The encoder compresses input data samples into a latent space representation, while the decoder attempts to reconstruct the original inputs from points in the learned latent space.

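By regularizing the latent space toward a simple prior distribution, typically a standard Gaussian, VAEs keep that space smooth and continuous. New data can then be generated by sampling a point from the prior and passing it through the decoder. Because nearby latent points decode to similar outputs, this also grants flexibility in analyzing, interpolating between, and manipulating data samples.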
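Two pieces of VAE machinery can be sketched in a few lines of Python, assuming the common setup in which the encoder outputs a mean and a log-variance for a diagonal Gaussian and the prior is a standard normal. The first is the reparameterization trick, which samples a latent vector in a way that gradients can flow through; the second is the KL-divergence penalty that pulls the encoder's distribution toward the prior. The encoder outputs below are hypothetical numbers, and the decoder is omitted.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1).

    Because the randomness lives in eps, z stays differentiable with respect
    to mu and log_var, so the encoder can be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))


rng = random.Random(42)

# Hypothetical encoder output for one input, with a 2-D latent space.
mu, log_var = [0.3, -1.2], [-0.5, 0.1]
z = reparameterize(mu, log_var, rng)
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))

# Generation: sample from the prior N(0, I), then decode (decoder not shown).
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
print("latent point to decode:", z_new)
```

In a full VAE, the training loss is the reconstruction error plus this KL term. The KL term is what keeps the latent space close to the prior, so that random draws from the prior decode into plausible data.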

VAEs are extensively used for tasks like molecular design, time series forecasting, text generation, and audio synthesis.

The compression and reconstruction mechanism in Variational Autoencoders (VAEs)

Autoregressive Models

Autoregressive models approach generative modeling from a data sequence perspective. They model conditional probability distributions to estimate future values based on previous values in a sequence.

For example, an autoregressive language model estimates the probability of the next word given the previous words in a sentence. This approach makes such models suitable for generating coherent text, mathematical sequences, source code, and other structured data.
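A character-level bigram model is a deliberately tiny autoregressive model: it conditions only on the immediately preceding character, whereas Transformer models like GPT condition on the entire preceding context. The minimal Python sketch below estimates p(next | previous) from counts in a toy corpus and then generates text one character at a time, each draw conditioned on the last. The corpus and function names are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def next_char_probs(counts, prev):
    """Conditional distribution p(next | prev), normalized from the counts."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {ch: c / total for ch, c in followers.items()}


def sample_text(counts, seed, length, rng):
    """Generate one character at a time, each conditioned on the previous one."""
    out = [seed]
    while len(out) < length:
        probs = next_char_probs(counts, out[-1])
        if not probs:  # this character was never followed by anything
            break
        chars, weights = zip(*probs.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)


corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)

print(next_char_probs(model, "t"))  # 'h' follows 't' in half the cases
print(sample_text(model, seed="t", length=30, rng=random.Random(0)))
```

The output is gibberish built from plausible fragments, which is exactly what a one-character context can offer; longer contexts are what let large autoregressive models stay coherent.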

Prominent autoregressive architectures include Transformer-based and Recurrent Neural Network (RNN)-based models. Models like GPT-3 demonstrate the generative capabilities unlocked by scaling up autoregressive models.

Flow Models

Flow models, also known as normalizing flows, learn a series of invertible transformations that map samples from a simple latent distribution to a complex real-world data distribution, and vice versa.

They offer exact data likelihood calculations and efficient latent space manipulation, making them suitable for specialized tasks like image editing, super-resolution, and data generation with finer control.

Some examples of flow-based generative models include Glow, RealNVP, and FlowGAN.
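The exact-likelihood property comes from the change-of-variables formula: if an invertible map f sends data x to a latent z, then log p(x) = log p_z(f(x)) + log|det J_f(x)|. The minimal Python sketch below implements one two-dimensional affine coupling layer, the building block of RealNVP, assuming a standard normal base distribution. The `scale` and `shift` functions are hypothetical stand-ins for the small neural networks a real flow would learn, and a practical model would stack many such layers, alternating which half passes through unchanged.

```python
import math


# Hypothetical scale and shift functions. In RealNVP these are small neural
# networks; any function works, because they are never inverted themselves.
def scale(u):
    return 0.5 * math.tanh(u)


def shift(u):
    return 0.3 * u


def forward(x1, x2):
    """Data -> latent. Returns (z1, z2, log|det Jacobian|)."""
    z1 = x1                                     # first half passes through
    z2 = x2 * math.exp(scale(x1)) + shift(x1)   # second half: scale and shift
    # The Jacobian is triangular, so its log-determinant is just scale(x1).
    return z1, z2, scale(x1)


def inverse(z1, z2):
    """Latent -> data, the exact inverse used for sampling."""
    x1 = z1
    x2 = (z2 - shift(z1)) * math.exp(-scale(z1))
    return x1, x2


def log_likelihood(x1, x2):
    """Exact log p(x) via the change-of-variables formula, N(0, I) base."""
    z1, z2, log_det = forward(x1, x2)
    log_pz = -0.5 * (z1 ** 2 + z2 ** 2) - math.log(2 * math.pi)
    return log_pz + log_det


x = (0.7, -1.3)
z1, z2, _ = forward(*x)
print("latent:", (z1, z2))
print("reconstructed:", inverse(z1, z2))  # recovers (0.7, -1.3) up to rounding
print("log p(x):", log_likelihood(*x))
```

The coupling design is what keeps flows practical: the inverse is cheap and exact, and the Jacobian determinant reduces to a sum of scale outputs instead of a full matrix determinant.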

With an understanding of the core paradigms and techniques underpinning generative AI models, we now move on to exploring the diverse applications they unlock across domains.

Applications of Generative AI

Generative AI models power innovative applications across domains like natural language processing, computer vision, simulation, and audio generation. Let's explore some prominent use cases.

Natural Language Processing

Language models like GPT-3 exhibit remarkable text generation capabilities that can automate writing tasks. By learning intricate linguistic patterns, they can complete sentences, generate coherent long-form text, translate between languages, and summarize passages automatically.

Applications include:

Creative writing and storytelling

Email and chatbot response suggestions

Automated document creation

Foreign language translation

Text summarization

Computer Vision

Image generation models like DALL-E 2 and Stable Diffusion produce stunning, lifelike images from text captions. Having learned the relationship between images and their textual descriptions from large collections of image-caption pairs, they can render diverse, photorealistic pictures spanning various artistic styles, compositions, and concepts.

Use cases include:

Concept art and illustration

Game asset creation

Product visualization

Graphic design

Image editing and manipulation

Simulation

Generative models can construct realistic simulated environments in which reinforcement learning agents and other systems are trained, tested, and validated efficiently.

Applications involve:

Testing autonomous vehicle systems

Evaluating robotic algorithms

Training virtual assistants

Modeling biological processes

Audio Generation

Models like OpenAI's Jukebox generate music mimicking various genres and artists. Similarly, voice synthesis tools like Lyrebird can produce natural-sounding speech from text.

These models enable applications like:

Automated music mastering

Text-to-speech

Voice cloning

Whether it's creating art, optimizing business operations, or advancing scientific progress, generative AI offers a broad canvas for innovation across sectors.

Next, we explore how generative models can facilitate and accelerate the development of AI systems themselves.

Synthetic Data Generation

While the creative potential of generative AI is captivating, arguably its most valuable application is accelerating the AI development process itself by generating synthetic data.

The Synthetic Data Imperative

Developing AI models requires massive labeled datasets, which are labor-intensive and expensive to create. Sensitive real-world datasets also have privacy and data use restrictions.

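Generative models offer a data-efficient alternative: once trained, they can produce large volumes of simulated, labeled data for model development and evaluation, reducing the need to collect, label, and share sensitive real-world records.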

For instance, an autonomous vehicle model can be trained extensively in diverse simulated environments before ever being tested on physical roads. Similarly, a medical diagnosis model can leverage thousands of simulated patient CT scans whose ground-truth labels are known by construction.

Realistic and Balanced Data Distribution

The synthetic data should closely match the statistical properties of real-world data, such as its structure and variability, while ensuring a balanced target class distribution so that rare cases are well represented.

This helps models trained on synthetic data transfer to downstream tasks on real data. Techniques like GANs, VAEs, and autoregressive models allow close emulation of true data distributions.
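As a minimal Python sketch of class-balanced synthetic data generation, the example below fits a separate one-dimensional Gaussian to each class of a small, imbalanced labeled dataset and then draws an equal number of samples per class. The per-class Gaussian is an assumption standing in for a real generative model such as a conditional GAN or VAE, and the dataset and labels are invented. The final loop is a basic fidelity check that per-class means of the synthetic data track the real ones.

```python
import random
import statistics
from collections import Counter


def fit_per_class(rows):
    """Estimate the mean and std of one feature separately for each label."""
    by_label = {}
    for value, label in rows:
        by_label.setdefault(label, []).append(value)
    return {label: (statistics.fmean(vals), statistics.stdev(vals))
            for label, vals in by_label.items()}


def generate_balanced(params, per_class, seed=0):
    """Draw the same number of labeled synthetic samples for every class."""
    rng = random.Random(seed)
    return [(rng.gauss(mu, sigma), label)
            for label, (mu, sigma) in params.items()
            for _ in range(per_class)]


# Hypothetical, imbalanced "real" dataset of (measurement, label) pairs.
real = [(1.1, "healthy"), (0.9, "healthy"), (1.3, "healthy"),
        (1.0, "healthy"), (1.2, "healthy"), (0.8, "healthy"),
        (3.1, "disease"), (2.7, "disease")]

params = fit_per_class(real)
synthetic = generate_balanced(params, per_class=500)

print(Counter(label for _, label in real))       # 6 healthy vs 2 disease
print(Counter(label for _, label in synthetic))  # 500 of each

# Sanity check: per-class means of the synthetic data track the real ones.
for label, (mu, _) in params.items():
    syn_mu = statistics.fmean(v for v, lab in synthetic if lab == label)
    print(label, round(mu, 2), round(syn_mu, 2))
```

Fitting a distribution to only two minority-class examples, as here, is fragile: synthetic data can rebalance classes, but it cannot add information the real data never contained, so its fidelity is best checked against held-out real data.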

Accelerated Model Development

With abundant simulated data available, researchers can rapidly iterate on experiments with different model architectures, hyperparameters, and techniques using fully labeled data.

This simulation-to-real transfer approach, enabled by generative AI, can scale more efficiently than conventional approaches that rely solely on manual data collection and labeling.

In short, synthetic data generation can accelerate AI research and improve model generalization before real-world deployment.

Now that we have covered the fundamentals of generative AI, including architectural approaches, applications, and use cases, we will conclude by highlighting some widely adopted and influential models pushing boundaries.

Most Popular Generative AI Models

Several generative AI models have recently demonstrated remarkable capabilities in synthesizing realistic data across modalities. Prominent examples include:

GPT Models

Created by OpenAI, the Generative Pre-trained Transformer (GPT) models are autoregressive language models that predict the next piece of text given the previous context. Successive GPT iterations have generally scaled up model parameters and training data to improve text generation quality.
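At each step, a language model of this kind outputs a raw score (logit) for every token in its vocabulary; a softmax turns those scores into probabilities, and the next token is drawn from them. The minimal Python sketch below shows that step with a temperature parameter, a common decoding knob. The four-word vocabulary and its scores are hypothetical; real GPT vocabularies contain tens of thousands of tokens.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, after dividing by a temperature."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature, rng):
    """Draw one token from the temperature-adjusted distribution."""
    return rng.choices(vocab, weights=softmax(logits, temperature))[0]


# Hypothetical scores for candidate next words after "The cat sat on the".
vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

for temp in (0.5, 1.0, 2.0):
    print(temp, [round(p, 3) for p in softmax(logits, temp)])

rng = random.Random(0)
print([sample_next_token(vocab, logits, 0.7, rng) for _ in range(5)])
```

Lower temperatures concentrate probability on the top-scoring word and make output more predictable; higher temperatures flatten the distribution and make it more varied. Generating a passage repeats this step, appending each sampled token to the context.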

GPT-3 has 175 billion parameters and was trained on hundreds of billions of tokens of text drawn largely from the internet. Its human-like text completion skills highlight the value of scale and foundation models. Its successor, GPT-4, delivers stronger performance on many benchmarks.
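As a back-of-the-envelope check on the 175-billion figure, a common approximation counts roughly 12·d² weights per Transformer layer, where d is the model width: 4·d² for the attention projections and 8·d² for a feed-forward block with hidden size 4·d. The Python sketch below plugs in the layer count (96), width (12,288), vocabulary size (50,257), and context length (2,048) reported for the largest GPT-3 model. It ignores biases and layer-norm weights, so it is an estimate rather than an exact count.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len):
    """Rough parameter count for a decoder-only Transformer.

    Per layer: 4*d^2 for the attention projections (Q, K, V, output)
    plus 8*d^2 for a feed-forward block with hidden size 4*d.
    Biases and layer-norm weights are ignored as comparatively tiny.
    """
    per_layer = 12 * d_model ** 2
    embeddings = (vocab_size + context_len) * d_model  # token + position
    return n_layers * per_layer + embeddings


# Hyperparameters reported for the 175B GPT-3 model.
n = approx_transformer_params(n_layers=96, d_model=12288,
                              vocab_size=50257, context_len=2048)
print(f"{n / 1e9:.1f} billion parameters")  # prints: 174.6 billion parameters
```

The estimate lands within about 0.3% of the published figure, and it shows that nearly all of the parameters sit in the repeated Transformer layers rather than in the embeddings.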

Users can engage in free-flowing dialogue, with the model formulating coherent, nuanced, and contextually relevant responses on arbitrary topics. This showcases the versatility of GPT models across natural language processing (NLP) applications spanning content creation, translation, summarization, and more.

Stable Diffusion

Stable Diffusion, released by Stability AI, is an open-source text-to-image model based on the diffusion approach. As a leading model for generating visual content from textual descriptions, Stable Diffusion produces high-fidelity pictures that showcase both photorealism and artistic creativity at scale.
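Diffusion models learn to reverse a gradual noising process. The forward half of that process has a convenient closed form: after t steps, a clean sample x_0 becomes x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, where eps is drawn from a standard normal and alpha_bar_t is the cumulative product of (1 - beta) over the first t steps. The minimal Python sketch below assumes the linear noise schedule (beta from 0.0001 to 0.02 over 1,000 steps) used in the DDPM paper and operates on a made-up four-value "image". Stable Diffusion itself runs this process in a compressed latent space rather than on raw pixels, and the learned denoising network that reverses it is not shown.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Variance of the noise added at each of the T forward steps."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1)
            for i in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod(1 - beta_s for s <= t): the signal left at step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def noise_to_step(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(a)*x0 + sqrt(1 - a)*eps."""
    a = alpha_bar[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps  # a denoising network is trained to predict eps from x_t


alpha_bar = cumulative_alphas(linear_beta_schedule())
x0 = [0.8, -0.2, 0.5, -0.9]  # hypothetical 4-pixel "image" scaled to [-1, 1]
rng = random.Random(0)
for t in (0, 250, 500, 999):
    x_t, _ = noise_to_step(x0, t, alpha_bar, rng)
    print(t, f"signal weight={math.sqrt(alpha_bar[t]):.3f}",
          [round(v, 2) for v in x_t])
```

By the last step almost no signal survives, so generation can start from pure noise and let the trained network remove it step by step.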

Complementary image generation models include OpenAI's DALL-E, which renders highly realistic imagery from text captions by combining computer vision and NLP techniques.

AlphaFold

Developed by DeepMind, AlphaFold has advanced protein science research tremendously through its state-of-the-art protein structure prediction capabilities. By using deep learning to predict a protein's 3D structure from its amino acid sequence with high accuracy, AlphaFold has yielded valuable insights benefiting biology, healthcare, drug discovery, and more.

In summary, these models represent leading examples at the frontier of generative AI research across modalities like text, images, 3D structures, and more. Their flexible architectures demonstrate the potential to unlock creativity and progress across sectors.

The Dawn of a Creative Revolution Powered by Generative AI

As this article has illustrated, generative AI represents a radically innovative leap in our quest to replicate and enhance human creativity using machines.

Unlike conventional AI confined to pattern analysis, generative models assimilate the intricate structures within data distributions to produce novel artifacts mirroring real-world complexity.

Techniques like GANs, VAEs, and diffusion models underpin these creative marvels, while large-scale data and compute unlock much of their potential. From crafting investment strategies to designing life-saving drugs to envisioning fantastical worlds, generative AI expands the canvas of human imagination.

Yet this computational creativity also raises pressing questions around ethics, governance, intellectual property, and societal impact, which call for judicious progress. If harnessed responsibly, with checks against its pitfalls, this technology may usher in an era of mass creativity benefiting industries and individuals alike.

The aspiration of democratizing creativity now seems within reach. We stand at an inflection point with generative AI, as pioneers constantly push its boundaries. With radical implications for how we create, consume, and critique art and content, glimpses of this creative future both enthrall and caution us as we set sail.